What is AudioCaps?

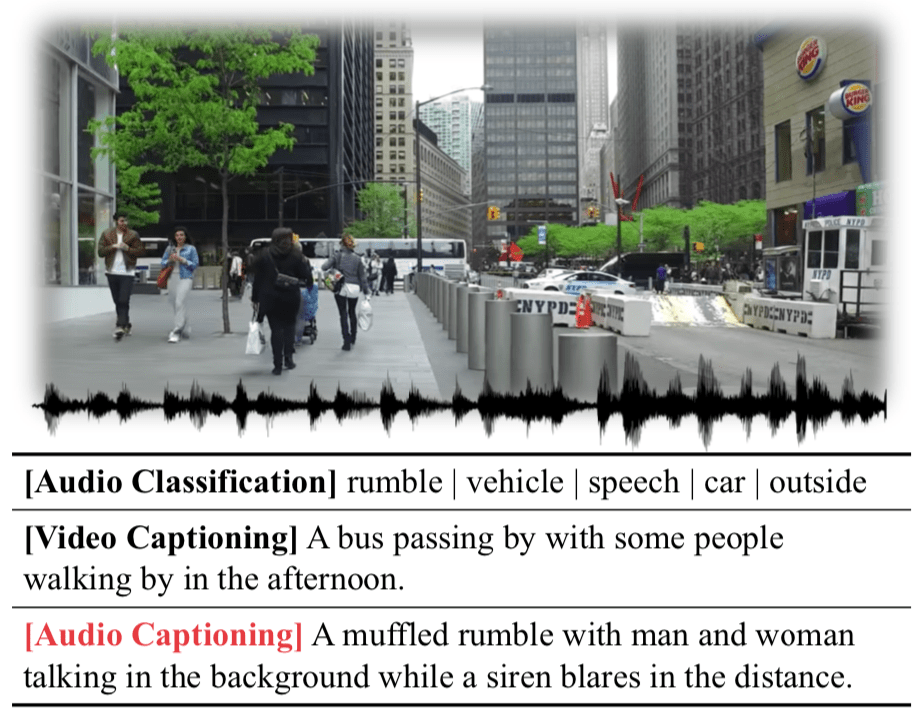

We explore audio captioning: generating natural language description for any kind of audio in the wild.

We contribute AudioCaps, a large-scale dataset of about 46K audio clips to human-written text pairs collected via crowdsourcing on the AudioSet dataset. The collected captions of AudioCaps are indeed faithful for audio inputs.

We provide the source code of the models to explore what forms of audio representation and captioning models are effective for the audio captioning.

Papers

AudioCaps: Generating Captions for Audios in The Wild

Chris Dongjoo Kim, Byeongchang Kim, Hyunmin Lee, and Gunhee Kim NAACL-HLT 2019 (Oral)

Bibtex

@inproceedings{audiocaps,

title={AudioCaps: Generating Captions for Audios in The Wild},

author={Kim, Chris Dongjoo and Kim, Byeongchang and Lee, Hyunmin and Kim, Gunhee},

booktitle={NAACL-HLT},

year={2019}

}

Acknowledgement